Author: Owen Camden, Source: www.eetimes.com

The user interface is an extremely important part of any vehicle “experience.” Electromechanical components in automotive applications, such as switches, must obviously provide high reliability and long operating life. But these switches must also contribute to the look (design) and feel (functionality) of a vehicle’s keyfob, center console, steering wheel, and other in-cabin interfaces.

Known in the industry as “haptics,” the touch, feel, and sound of a switch’s actuation are paramount to a user’s experience and impression of a particular vehicle. Automotive design engineers want user interfaces to respond and provide feedback in specific ways that are unique to their vehicle.

Essentially, user feedback is part of the identity of a vehicle and its manufacturer. Haptic features are frequently customized for specific vehicles and manufacturers, and this is often accomplished through advanced switch configurations. These switches must combine ruggedness with design flexibility to meet automotive requirements.

Sealing

Every harsh-environment application shares a need for ruggedized components that can withstand brutal environmental conditions. Automotive applications require switches sealed to IP40, IP65, IP67, or IP68, depending on the required resistance to contamination by dust, dirt, salt, and water.
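The IP ratings above follow the ingress-protection code of IEC 60529: the first digit rates protection against solid objects and dust, the second against water. The minimal Python sketch below decodes the four ratings mentioned. The descriptions are condensed paraphrases rather than the standard's wording, the lookup tables cover only a few digits, and `decode_ip` is a hypothetical helper name.

```python
# Condensed paraphrases of IEC 60529 digit meanings (only a subset of digits);
# consult the standard itself for the exact test conditions.
SOLIDS = {
    0: "no protection against solid objects",
    4: "protected against solid objects larger than 1 mm",
    5: "dust-protected (limited ingress, no harmful deposit)",
    6: "dust-tight",
}

LIQUIDS = {
    0: "no protection against water",
    5: "protected against water jets",
    7: "protected against temporary immersion (up to 1 m)",
    8: "protected against continuous immersion (conditions set by manufacturer)",
}


def decode_ip(code: str) -> tuple[str, str]:
    """Split an 'IPxy' code into its solid and liquid protection levels."""
    code = code.strip().upper()
    if len(code) != 4 or not code.startswith("IP") or not code[2:].isdigit():
        raise ValueError(f"unsupported IP code: {code!r}")
    solid, liquid = int(code[2]), int(code[3])
    if solid not in SOLIDS or liquid not in LIQUIDS:
        raise ValueError(f"digit not in this sketch's table: {code!r}")
    return SOLIDS[solid], LIQUIDS[liquid]


for rating in ("IP40", "IP65", "IP67", "IP68"):
    solids, liquids = decode_ip(rating)
    print(f"{rating}: {solids}; {liquids}")
```

Note that IP40 specifies no water protection at all; resistance to liquids comes from the IP65 through IP68 ratings.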

Switch designs often combat these elements with an internal seal that protects the switching mechanism and an external panel seal that keeps liquids from entering the panel or enclosure. Silicone rubber caps are also used to prevent the ingress of fluids that could adversely affect the function of standard switches. Additionally, the materials used in these switches, such as PBT (polybutylene terephthalate), must be robust under exposure to chemicals, water, and other potential contaminants.

Rugged switch designs for automotive vehicles also need to be resistant to extreme temperatures, vibration, and shock. Depending on the specific application within or outside the vehicle, advanced snap-acting, tactile, pushbutton, and toggle switches are available with IP67/IP68 ratings that provide high levels of reliability and predictability.

Reliability and predictable behavior depend directly on how well the switch suits the mechanical environment of the unit and the final OEM specification. Switch manufacturers must simulate the environmental conditions a switch will encounter over its operating life to ensure their design achieves the specified robustness.

Haptics

As the gateway to a user interface, a switch configuration must have a desirable appearance, feel, and sound. Switch manufacturers must work closely with automakers to optimize aesthetics, ergonomics, and performance.

For typical switching functions in automotive applications, such as front panels and dashboards, robust pushbutton switches are often used. Sealed up to IP68, pushbutton switches can be designed to provide excellent tactile feedback with specific travel and actuation forces. Pushbutton switches also offer a long operating life of more than one million cycles, making them well suited for use in automotive vehicles.
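To put a one-million-cycle rating in perspective, the short Python sketch below converts a rated cycle count into years of service for a few usage profiles. The presses-per-day figures are hypothetical illustrations, not data from the article; real duty cycles depend on the switch’s function.

```python
def service_years(rated_cycles: int, presses_per_day: float) -> float:
    """Years of service before a switch reaches its rated cycle count."""
    days = rated_cycles / presses_per_day
    return days / 365.25  # average year length, including leap years


# Hypothetical usage profiles; real duty cycles depend on the function.
for presses_per_day in (10, 50, 200):
    years = service_years(1_000_000, presses_per_day)
    print(f"{presses_per_day:>4} presses/day -> {years:.1f} years")
```

Under these assumptions, even a heavily used switch pressed 200 times a day would take roughly 13.7 years to reach one million cycles.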

Ultra-miniature tactile switches are ideal for keyfobs, as they combine long operating life with long-term reliability and low-current compatibility, while a snap-acting switch would most likely be used in a vehicle’s fob reader. Both types can be customized to provide precise feedback.

The audible response of a switch is another important way for vehicle manufacturers to differentiate their vehicles. It can be customized to deliver a specified sound or click on actuation, and automotive OEMs increasingly treat this acoustic response as part of their branding. Because the audible response depends heavily on the switch design, vehicle manufacturers need the same sound from every unit, regardless of switch configuration.

To deliver repeatable audible responses, switch manufacturers have developed proven platforms whose designs carry over to different applications. These switches provide high reliability and consistent haptics in an “automotive proven” series, while drastically reducing development cost and time.

Implementing new technologies

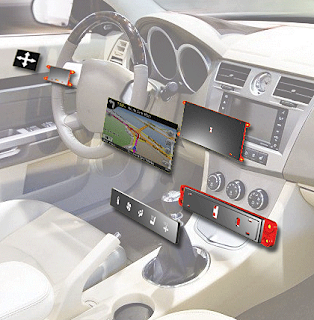

Consumers are by now familiar with touch screens in smartphones, vehicle infotainment systems, navigation/GPS systems, and even computer monitors. Similarly, touch sensing controls, including tactile screens, can now be implemented in almost any electronic device, including center console interfaces. The “clicker” function (described below) can be enabled on any touch-sensitive surface and provides uniform haptics across the whole surface. This simple mechanical system integrates low-profile tactile switches with an actuating superstructure to create a clickable touch sensing system.

Built on a structure with supporting points, the actuator collects the force from the touching surface and transmits it to the switch with minimal distortion or power consumption. Pressing anywhere on the surface applies a force to the supporting points at the edge, which transfer it through the middle arms to the appropriate tact switch. Compared with “hinged” solutions, the technology is more efficient and provides a smoother, more uniform click. Multiple configurations, structures, and profiles, from 2.5 to 10 mm, can be developed depending on the application and the room available for integration.

In addition, the clicker technology can be combined with multiple key areas on the same actuating surface. Touch sensing manages the keys/buttons: touching an area pre-selects its function, and actuating the whole surface selects it. The main benefit is that instead of managing separate keys/buttons, the unit can be managed as a single flush surface with haptics, which reduces integration issues and simplifies features such as key alignment, backlighting, and tactile differences between keys.
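The pre-select-then-click interaction described above can be modeled as a tiny state machine. The Python sketch below is a hypothetical illustration, not C&K’s firmware: `ClickerPanel`, `on_touch`, `on_click`, and the area and function names are all invented for the example. Touch events only update the pre-selection; any click of the surface confirms whatever is pre-selected.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClickerPanel:
    """Hypothetical model of a flush clicker surface with touch-sensed key areas.

    Touching an area only pre-selects its function; actuating the surface
    (the 'click') confirms the current pre-selection.
    """

    areas: dict[str, str]  # area id -> function name (hypothetical)
    preselected: Optional[str] = None

    def on_touch(self, area_id: Optional[str]) -> None:
        # Touch sensing: remember which known area is touched (None = no touch).
        self.preselected = area_id if area_id in self.areas else None

    def on_click(self) -> Optional[str]:
        # Any actuation of the surface is treated as one confirm event; the
        # function comes from the pre-selection, not from where the force lands.
        if self.preselected is None:
            return None  # a click with no pre-selected area is ignored
        return self.areas[self.preselected]


panel = ClickerPanel({"left": "seat heater", "right": "defrost"})
panel.on_touch("right")
print(panel.on_click())  # defrost
```

Because confirmation comes from a single click event rather than from separate switches under each key, the key areas can be redefined in software without changing the mechanics, which is consistent with the integration benefits the article describes.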

Assembly

For assembly and maintenance, electromechanical components for automotive applications often feature quick connections that support modular installation: they plug into the panel and lock firmly into place. This arrangement also lets the OEM store individual switch elements separately and configure them during final assembly to meet application-specific needs, saving storage space and costs.

Illumination is one example of this modular approach. Vehicles contain a large number of visual indicators for informational and safety purposes, signifying the current state or function of the vehicle’s features. LED indicators can be mounted independently of the switch and driven by an IC that makes them blink slowly or quickly, or produce different colors, to indicate status.
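As a rough illustration of what a controlling IC might compute for slow and fast blinking, the Python sketch below decides whether an indicator LED is lit at a given time. The mode names and blink periods are hypothetical assumptions, not values from the article or any OEM specification, and the sketch models only blink timing (50% duty cycle), not color.

```python
from enum import Enum


class IndicatorMode(Enum):
    OFF = "off"
    ON = "on"
    SLOW_BLINK = "slow"
    FAST_BLINK = "fast"


# Hypothetical blink periods in milliseconds; real values come from the OEM spec.
BLINK_PERIOD_MS = {IndicatorMode.SLOW_BLINK: 1000, IndicatorMode.FAST_BLINK: 250}


def led_is_lit(mode: IndicatorMode, t_ms: int) -> bool:
    """Return whether the indicator LED should be lit at time t_ms (50% duty)."""
    if mode is IndicatorMode.OFF:
        return False
    if mode is IndicatorMode.ON:
        return True
    period = BLINK_PERIOD_MS[mode]
    # Lit during the first half of each period, dark during the second half.
    return (t_ms % period) < period // 2


print([led_is_lit(IndicatorMode.FAST_BLINK, t) for t in range(0, 500, 125)])
# [True, False, True, False]
```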

Adding illumination at the switch level can significantly reduce materials costs. Some switch designs with optional illumination let users order the base switch with or without a cap, so a single base switch can be paired with multiple color caps, or snap-on caps, during installation to suit each specific application. This modular solution not only leaves OEMs with fewer part numbers to inventory, simplifying materials handling and assembly, but also gives engineers greater flexibility in circuit and panel design.
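The part-number saving comes from simple arithmetic: if every base switch and cap combination is pre-assembled, the count multiplies, whereas stocking bases and caps separately makes it add. The Python sketch below shows this with a hypothetical catalog of three base variants and five cap colors; the figures are illustrative only.

```python
def part_numbers(base_variants: int, cap_colors: int, modular: bool) -> int:
    """Count distinct part numbers an OEM must stock.

    Pre-assembled: every base/cap combination is its own part number.
    Modular: base switches and caps are stocked separately and combined
    during installation.
    """
    if modular:
        return base_variants + cap_colors
    return base_variants * cap_colors


# Hypothetical catalog: 3 base switch variants, 5 cap colors.
print(part_numbers(3, 5, modular=False))  # 15
print(part_numbers(3, 5, modular=True))   # 8
```

The gap widens as either dimension grows, which is why modular caps pay off most for switches offered in many colors.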

Manufacturers have also developed pushbutton switches with panel-mounting options that simplify assembly. Rear panel-mounting switches, such as C&K’s rugged pushbutton switches, are ideal for applications where multiple switches will be mounted to a PCB. Customers can mount the switches to the PCB first and then install the entire assembly into the armrest or panel. This is much easier than installing traditional front-mounted switches, which require the mounting hardware to be tightened from behind the panel, where access is sometimes difficult, and all the leads to be run to the mating connectors on the PCB. Rear-mounted switches let customers install the hardware from the top and still achieve the same look while simplifying the installation process.

Custom electromechanical solutions

OEMs are approaching electromechanical component manufacturers for more than just switches. Using a switch manufacturer for designs beyond the switch itself can increase flexibility in the overall design. Switch dimensions are becoming more critical, making it imperative to work closely with customers to pin down every detail. Today, the job is less about mounting the switch to a PC board or adding wire leads or a connector, and more about defining the problems that need to be solved. Because switch manufacturers now handle the entire module, they spend more and more time with customers to understand how the application affects the module and to uncover challenges that were not previously considered.

By working closely with customers in all phases of the design process, switch manufacturers can identify the materials that interface with the operator and those in the contact mechanism itself, then re-evaluate and alter them to meet performance, reliability, lifespan, and robustness standards. For example, some manufacturers now offer switch packages with multi-switching capability and high over-travel performance, built on various core switching technologies such as opposing tactile switches or a dome array on a PC board. Such packages often include additional PC board-mounted integrated electronics, custom circuitry, and industry-standard connectors in a complete package.

Still other solutions optimized for automotive locations such as interior headliners feature not only customized switches with insert-molded housings and custom circuitry and termination, but also painted and laser-etched button graphics, decoration, and backlighting for nighttime use. Today, switch manufacturers produce even these customized graphics and decorations in-house.

Conclusion

Although electromechanical components are some of the last devices designed or specified into a center console, dashboard, or steering wheel, switches are among the most important components in a system and one of the first a vehicle operator touches. Each automotive manufacturer has different performance requirements, external presentations, internal spacing, and footprint restrictions. As a result, each vehicle requires different packaging and orientation of switches to achieve its functional and performance objectives. Customized haptic options (actuation travel, force, and audible sound), ergonomic options (illumination, decoration, appearance), sealing options, circuitry configurations, housing styles, mounting styles (including threaded or snap-in mounting), and termination options are integral to meeting these application requirements.

Combining innovative electromechanical designs with complete switch assemblies often gives customers greater flexibility and customization options that reduce assembly and manufacturing costs while improving performance and reliability. Working closely with the switch manufacturer to solve design problems and implement customized performance requirements can also streamline the prototyping and production ramp-up stages, bringing the finished product to market faster.